5.1 Symptoms Observed in Legacy RAG Deployments

Many companies want to build AI search tools, but their document libraries fight back in several ways. Different versions of the same file sit side-by-side, so a query about a product might pull facts from versions 15, 16, and even an unreleased 17 all at once. Salespeople often copy an old proposal, tweak a few lines, choose "Save As," and upload it, so a three-year-old file looks brand-new and slips past any "use only recent files" filter.

Even if only five percent of a 100,000-document collection changes every six months, that is roughly 10,000 changed documents a year and, for long documents, potentially millions of pages to recheck: far too much for people to manage by hand. Tech teams then slice each document into fixed-size chunks to fit into a vector database. Because fixed-size boundaries ignore meaning, this approach can split important ideas in half, mix in off-topic sentences, and create many almost-identical chunks that crowd out more relevant ones.

When the retrieval system feeds these messy snippets to the language model, the model tries to merge them and can end up inventing specs, prices, or legal clauses that never existed. Meanwhile, many vector-store deployments do not tag data with fine-grained permissions like "export-controlled" or "partner-only," leaving security holes. All of this adds large maintenance costs and makes leaders hesitate to roll AI systems into full production.

The following symptoms are consistently reported when enterprises rely on the "dump-and-chunk" approach (fixed-length chunking → vectorize → retrieve) without upstream data governance.

Data-Quality Drift

- Version Conflicts

Example: A query about a computer infrastructure provider's server specs returns v15, v16, and unreleased v17 details in a single answer because old proposal PDFs coexist with new product sheets.

- Stale Content Masquerading as Fresh

"Save-As" behavior: sales staff copy a three-year-old proposal, tweak a few lines, and upload it. The file carries a "last-modified" date of last week, defeating any date-filter in the retrieval pipeline.

- Untrackable Change Rate

Even with a modest 5% change to a 100k-document corpus every six months, roughly 10,000 documents, potentially millions of pages, would require review annually, well beyond human capacity.
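
The "Save-As" symptom above suggests comparing content rather than timestamps. The following is a minimal sketch of that idea, assuming word-shingle Jaccard similarity as the comparison method; the function names, the 0.8 threshold, and the file names are hypothetical and not part of any specific product.

```python
from datetime import date
from itertools import combinations
import re


def shingles(text: str, k: int = 3) -> set[str]:
    """Return the set of k-word shingles of a lowercased, whitespace-normalized text."""
    words = re.sub(r"\s+", " ", text.lower()).strip().split(" ")
    if len(words) <= k:
        return {" ".join(words)}
    return {" ".join(words[i:i + k]) for i in range(len(words) - k + 1)}


def jaccard(a: set[str], b: set[str]) -> float:
    """Jaccard similarity: shared shingles divided by all distinct shingles."""
    union = a | b
    return len(a & b) / len(union) if union else 1.0


def flag_recycled(docs: list[dict], threshold: float = 0.8) -> list[tuple[str, str, float]]:
    """Return (newer_id, older_id, similarity) for near-identical document pairs.

    Only content is compared, so a fresh last-modified date does not hide a
    recycled file. Pairwise comparison is O(n^2): acceptable for a sketch,
    not for a large corpus.
    """
    sets = {d["id"]: shingles(d["text"]) for d in docs}
    flagged = []
    for a, b in combinations(docs, 2):
        sim = jaccard(sets[a["id"]], sets[b["id"]])
        if sim >= threshold:
            newer, older = (a, b) if a["modified"] >= b["modified"] else (b, a)
            flagged.append((newer["id"], older["id"], sim))
    return flagged


if __name__ == "__main__":
    body = "The server ships with dual power supplies and a three year warranty. Pricing is valid through fiscal year"
    docs = [
        {"id": "proposal_old.docx", "modified": date(2021, 3, 1), "text": body + " 2021."},
        {"id": "proposal_copy.docx", "modified": date(2024, 6, 10), "text": body + " 2024."},
    ]
    for newer, older, sim in flag_recycled(docs):
        print(f"{newer} looks like a copy of {older} (similarity {sim:.2f})")
```

A date filter would accept the copy because it was modified recently; the content comparison flags it regardless. At corpus scale, an approximate technique such as MinHash would likely replace the pairwise loop.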

Semantic Fragmentation from Naïve Chunking

- Broken Concepts

Fixed 1,000-character windows routinely cut longer paragraphs in half, separating important context such as a product value proposition from the text it qualifies. This degrades chunk quality and lowers the cosine similarity between query and chunk.

- Context Dilution

Only 25%–40% of the information in a naïve chunk may pertain to the user's intent; the rest introduces "vector noise," causing irrelevant chunks to score higher than precise ones.
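
The "Broken Concepts" symptom can be illustrated with a small comparison. This is a sketch only: `fixed_chunks` mimics the fixed-length windowing described above, while `paragraph_chunks` is a hypothetical paragraph-aware alternative shown for contrast, not a description of any particular product's method. The sample text and the small window sizes are assumptions chosen to keep the example short.

```python
def fixed_chunks(text: str, size: int) -> list[str]:
    """Naive chunking: cut every `size` characters, ignoring sentence and paragraph boundaries."""
    return [text[i:i + size] for i in range(0, len(text), size)]


def paragraph_chunks(text: str, max_size: int) -> list[str]:
    """Greedily pack whole paragraphs into chunks of at most `max_size` characters.

    A paragraph is never split; one that exceeds `max_size` becomes its own chunk.
    """
    chunks: list[str] = []
    current = ""
    for para in (p.strip() for p in text.split("\n\n")):
        if not para:
            continue
        candidate = f"{current}\n\n{para}" if current else para
        if current and len(candidate) > max_size:
            chunks.append(current)
            current = para
        else:
            current = candidate
    if current:
        chunks.append(current)
    return chunks


if __name__ == "__main__":
    doc = (
        "Our value proposition is simple: the appliance cuts storage costs "
        "by consolidating backups onto one platform."
        "\n\n"
        "Warranty terms are unrelated to pricing and are covered in a separate agreement."
    )
    for chunk in fixed_chunks(doc, 80):
        print("FIXED    |", repr(chunk))
    for chunk in paragraph_chunks(doc, 120):
        print("PARAGRAPH|", repr(chunk))
```

With the fixed window, the value-proposition sentence is cut mid-thought and its tail lands in the same chunk as the unrelated warranty sentence, which is the dilution effect described above.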

Retrieval Noise & Hallucination Patterns

- Top-k Pollution

Because duplicates appear with slightly different embeddings, they crowd out more relevant chunks; k = 3 may return three near-identical, outdated passages instead of the single current, accurate one.

- Model Guess-work

When conflicting chunks are fed to the LLM, it "hallucinates" a synthesis—often inventing specs, prices, or legal clauses that appear plausible but are unfounded.
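
Top-k pollution can be reproduced with toy vectors. The sketch below assumes hand-written three-dimensional "embeddings" (real embeddings have hundreds of dimensions and come from a model), a hypothetical duplicate threshold of 0.99, and illustrative chunk names. It shows plain top-k retrieval next to a greedy variant that skips near-duplicates of chunks already selected.

```python
import math

Vector = list[float]


def cosine(a: Vector, b: Vector) -> float:
    """Cosine similarity of two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def top_k(query: Vector, items: list[tuple[str, Vector]], k: int) -> list[str]:
    """Return the ids of the k items most similar to the query."""
    ranked = sorted(items, key=lambda it: cosine(query, it[1]), reverse=True)
    return [item_id for item_id, _ in ranked[:k]]


def top_k_dedup(query: Vector, items: list[tuple[str, Vector]], k: int,
                dup_threshold: float = 0.99) -> list[str]:
    """Like top_k, but skip any item that is a near-duplicate of one already selected."""
    ranked = sorted(items, key=lambda it: cosine(query, it[1]), reverse=True)
    selected: list[tuple[str, Vector]] = []
    for item_id, vec in ranked:
        if all(cosine(vec, chosen) < dup_threshold for _, chosen in selected):
            selected.append((item_id, vec))
        if len(selected) == k:
            break
    return [item_id for item_id, _ in selected]


if __name__ == "__main__":
    query = [1.0, 0.05, 0.0]
    items = [
        ("v15_copy_a", [0.9, 0.10, 0.0]),   # three near-identical outdated passages
        ("v15_copy_b", [0.9, 0.11, 0.0]),
        ("v15_copy_c", [0.9, 0.09, 0.0]),
        ("v16_current", [0.8, 0.0, 0.3]),   # the current passage, worded differently
        ("unrelated", [0.0, 1.0, 0.0]),
    ]
    print("plain top-3:  ", top_k(query, items, 3))
    print("deduped top-3:", top_k_dedup(query, items, 3))
```

Deduplication at query time only frees slots in the result list; it does not decide which version is correct, so the surviving duplicate may still be the outdated one. That decision requires governance upstream of the index.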

Governance & Access-Control Gaps

- One-Size-Fits-All Index

Standard vector-store deployments rarely carry robust per-chunk tags for data permissioning, export control, clearance, or partner-specific sharing (e.g., restricting who can access "classified" information), so every user effectively queries the same index.
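
One way to picture the missing control is a label check applied before ranking. The sketch below is an assumption-laden illustration: the `Chunk` type, the `visible_chunks` function, and the rule "a user must hold every label on a chunk" are hypothetical, and the label strings reuse the examples from this section.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Chunk:
    chunk_id: str
    text: str
    labels: frozenset[str] = frozenset()  # e.g. {"partner-only"}; empty means unrestricted here


def visible_chunks(chunks: list[Chunk], entitlements: set[str]) -> list[Chunk]:
    """Keep only chunks whose labels are all held by the requesting user.

    Filtering happens before similarity ranking, so restricted text never
    reaches the prompt. Treating unlabeled chunks as public is a
    simplification; a stricter deployment might deny unlabeled content.
    """
    return [c for c in chunks if c.labels <= entitlements]


if __name__ == "__main__":
    index = [
        Chunk("c1", "Public datasheet summary."),
        Chunk("c2", "Partner discount schedule.", frozenset({"partner-only"})),
        Chunk("c3", "Export-restricted design note.", frozenset({"export-controlled"})),
    ]
    partner_user = {"partner-only"}
    print([c.chunk_id for c in visible_chunks(index, partner_user)])
```

The check only works if labels are attached per chunk at ingestion time, which is why the gap is described here as a governance problem and not purely a retrieval problem.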

Operational & Cost Burden

- Human Maintenance of Datasets Is Impossible

Locating and updating "paragraph 47 in document 59 of 1,000" for every product update across every document is already infeasible at pilot scale. Production corpora are not 1,000 documents but 1,000,000 or more, so the risk and the update burden cause stakeholders to freeze further AI rollout.

5.2 Impact – Why "Just One Bad Answer" Can Cost Millions (or Lives)

Financial Repercussions

- Scenario 1 Mega-Bid Meltdown: During a $2 billion infrastructure RFP, an LLM-powered proposal assistant could mix legacy pricing (FY-21) with current discount tables (FY-24). The buyer would flag the inconsistency and disqualify the vendor on compliance grounds, a total write-off of 18 months of pursuit costs and pipeline revenue.

- Scenario 2 Warranty & Recall Cascades: An electronics manufacturer published a chatbot‑generated BOM that incorporated an obsolete capacitor. Field failures triggered a $47 million recall and stiff penalties from downstream OEM partners.

- Scenario 3 Regulatory Fines: Article 10 of the EU AI Act imposes data-governance requirements on high-risk AI systems. In this scenario, delivering a hallucinated clinical-trial statistic leads to a €5 million fine from the EMA and a forced product-labelling change.

Operational & Safety Risks

- "Grounded Fleet" Scenario: A major defense customer could find that 4 of the top-10 answers returned by a legacy RAG system reference an outdated torque value for helicopter rotor bolts. If the error propagated, every aircraft would require emergency inspection, potentially stranding troops and costing $X million in downtime.

- Intelligence Failure: Conflicting operation code names ("Operation SEA TURTLE" vs. "SEA SHIELD") in separate PDFs confused an analyst's threat brief, delaying a security response by X hours.

Strategic & Cultural Damage

- Erosion of Trust: Once users see a system hallucinate, uptake plummets; employees revert to manual searches, negating AI ROI.

- Innovation Freeze: Board‑level risk committees often impose a moratorium on GenAI roll‑outs after a single public mistake, stalling digital‑transformation road‑maps for quarters.

- Brand Hit: Social‑media virality of a bad chatbot answer can wipe out years of marketing investment.

5.3 Root Causes – The Mechanics Behind the Mayhem

Enterprise RAG initiatives fail less because of model quality and more because the underlying data pipeline lets chaos seep in at every stage. Product specs and policy language can now change weekly, so even a modest 5% drift every six months leaves roughly 26% to 30% of the knowledge base, approaching one-third, obsolete within three years.
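
The three-year drift figure can be checked with a short calculation. This sketch takes the 5% figure as a per-six-month rate, as stated elsewhere in this section, and compares two assumed models: a linear one, in which every cycle changes previously untouched content, and a compounding one, in which changes land at random and sometimes hit content that is already stale. The function names are illustrative.

```python
def stale_fraction_linear(rate: float, periods: int) -> float:
    """Upper bound: each period makes a fresh `rate` share of the corpus stale."""
    return min(1.0, rate * periods)


def stale_fraction_compound(rate: float, periods: int) -> float:
    """Changes hit content at random, so the untouched share shrinks by (1 - rate) each period."""
    return 1.0 - (1.0 - rate) ** periods


if __name__ == "__main__":
    rate = 0.05      # 5% of the corpus changes per six-month period
    periods = 6      # three years
    print(f"linear:   {stale_fraction_linear(rate, periods):.1%}")
    print(f"compound: {stale_fraction_compound(rate, periods):.1%}")
```

Under these assumptions the stale share after three years falls between about 26% (compounding) and 30% (linear), which is the basis for the "roughly one-third" shorthand.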

The same paragraph lives—slightly edited—in SharePoint, Jira, email, and vendor portals, while "save‑as syndrome" keeps stamping fresh timestamps onto stale proposals. With no single, governed taxonomy, subject‑matter experts can't "fix once, publish everywhere," and versioning scattered across silos makes audits or roll‑backs impossible.

The LLM is forced to guess its way through inconsistencies—amplifying hallucinations and potentially leaking classified text into public answers. Manually patching "paragraph 47 of document 59" across a million‑file corpus would take tens of thousands of labor hours, so errors linger, compound, and eventually freeze further AI roll‑outs.

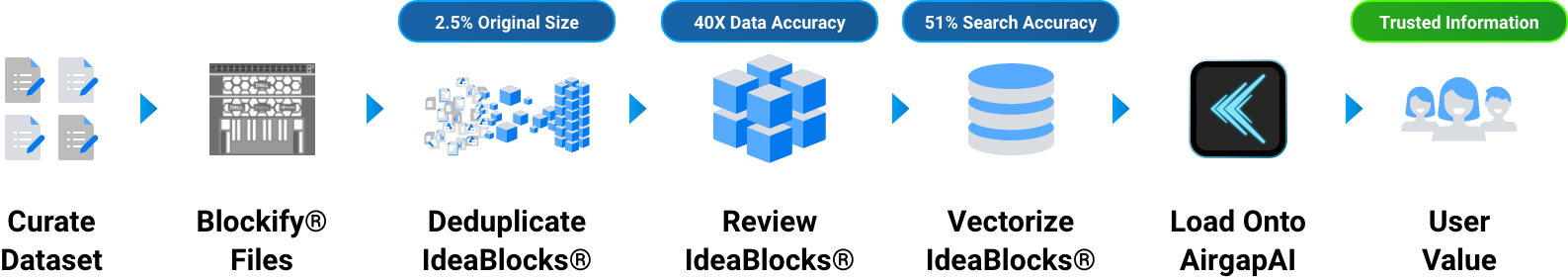

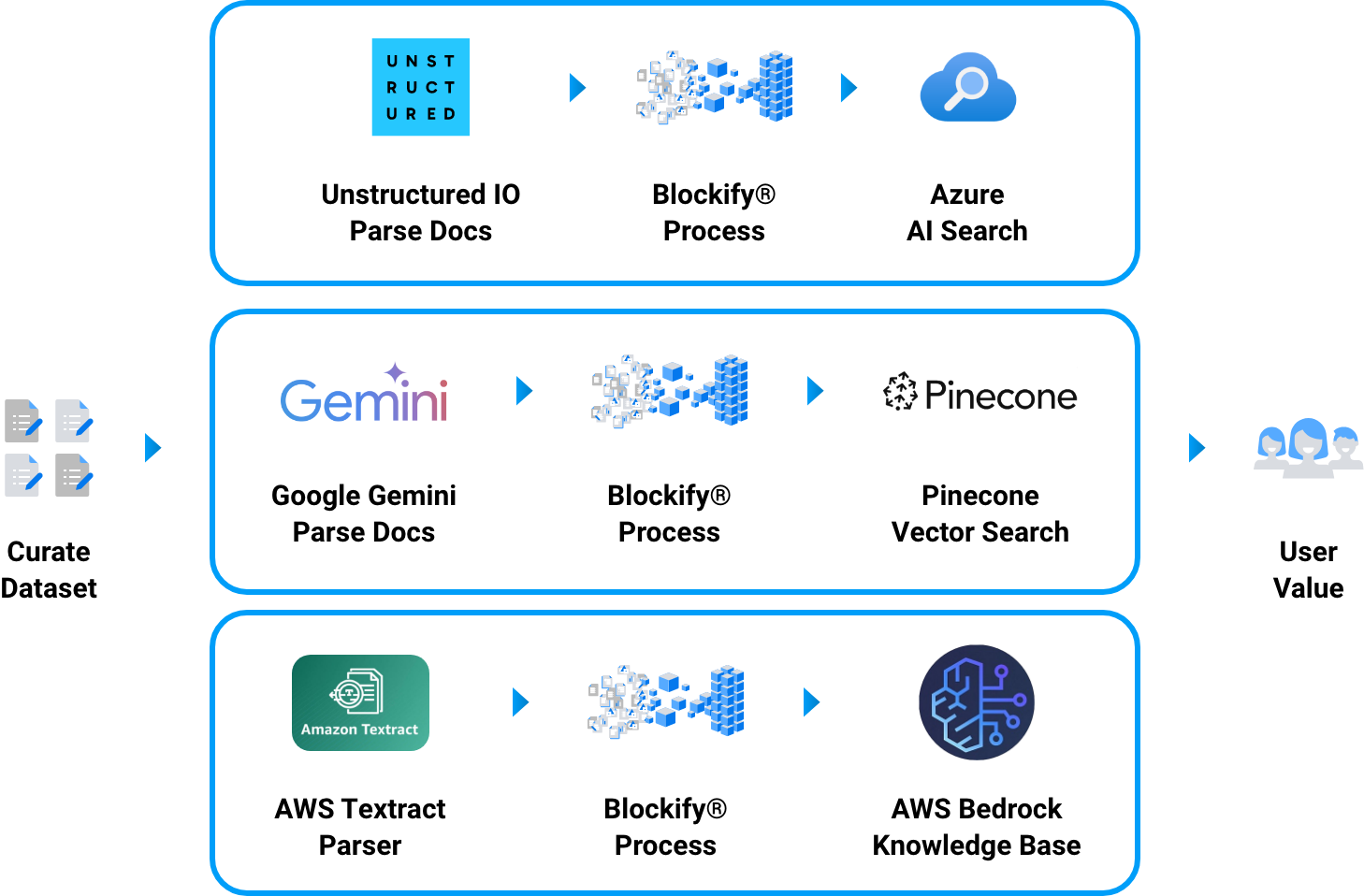

A preprocessing and governance layer such as Blockify® is required to restore canonical truth, enforce permissions, and deliver context‑complete, drift‑resistant blocks to downstream LLMs.

The major root causes are listed below by category.

- Accelerating Data Drift

  - Product cadence keeps shrinking; SaaS revisions ship weekly, hardware every quarter. Even a "small" 5% drift every six months means that within three years roughly 26% to 30% of a knowledge base, approaching one-third, is out-of-date.

  - Regulatory churn (GDPR, CMMC, FDA 21 CFR Part 11) forces frequent wording changes that legacy pipelines never re-index.

- Content Proliferation Without Convergence

  - The same paragraph lives in SharePoint, Jira wikis, email chains, and vendor portals, each with slight edits.

  - "Save-As Syndrome" (salespeople cloning old proposals) multiplies duplicates with misleading "last-modified" timestamps.

- Absence of a Governed "Single Source of Truth"

  - No enterprise-wide taxonomy links key information to a master record; Subject Matter Experts (SMEs) cannot easily "correct once, publish everywhere."

  - Versioning spread across disparate repositories prevents atomic roll-back or audit.

- Naïve Chunk-Based Indexing

  - Fixed-length windows slice semantic units, destroying contextual coherence and weakening the cosine similarity between query and chunk.

  - Chunks inherit the security label of the parent file (or none at all). A single mixed-classification slide deck can surface "SECRET" paragraphs to a "PUBLIC" query.

- Vector Noise & Embedding Collisions

  - Near-duplicate paragraphs occupy adjacent positions in vector space; retrieval engines return redundant or conflicting passages, increasing hallucination probability.

- Human-Scale Maintenance Is Impossible

  - Updating "paragraph 47 of document 59" across 1 million files would require tens of thousands of labor hours, which is economically infeasible, so errors persist and compound.
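
The labor estimate in the last item can be sanity-checked with simple arithmetic. The sketch below assumes a hypothetical two minutes per file to locate and correct one paragraph; that per-file figure is an illustration only and is not taken from measured data.

```python
def maintenance_hours(files: int, minutes_per_file: float, fraction_affected: float = 1.0) -> float:
    """Total labor hours to touch `fraction_affected` of `files` at `minutes_per_file` each."""
    return files * fraction_affected * minutes_per_file / 60.0


if __name__ == "__main__":
    # Assumed: every one of 1,000,000 files must be checked, at 2 minutes per file.
    hours = maintenance_hours(1_000_000, 2)
    print(f"{hours:,.0f} labor hours for a single corpus-wide update")
```

Even at this optimistic per-file effort, one corpus-wide pass lands in the tens of thousands of hours, and the cost recurs with every product update.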

These intertwined root causes explain why legacy RAG architectures plateau in pilot, why hallucination rates stay stubbornly high, and why enterprises urgently need a preprocessing and governance layer such as Blockify® to restore trust and unlock GenAI value.